Llama3 on Google Colab

- Gaudhiwaa Hendrasto

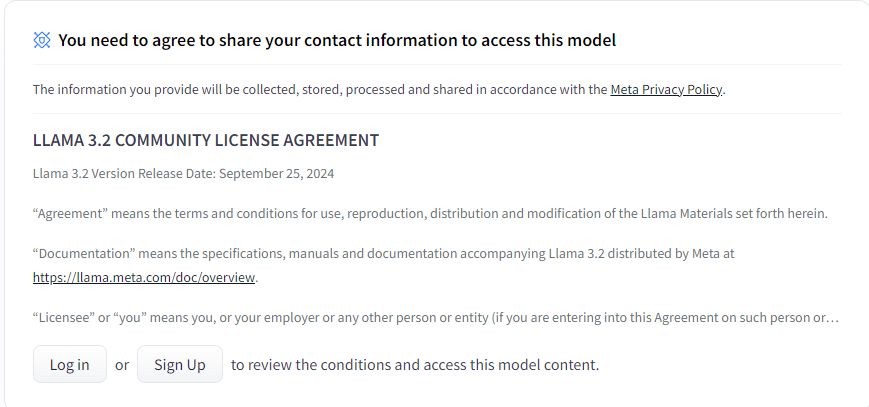

Here is the link to the Llama3 model: https://huggingface.co/meta-llama/Llama-3.2-1B-Instruct. Request access to the Llama model on that page, as shown in the following image.

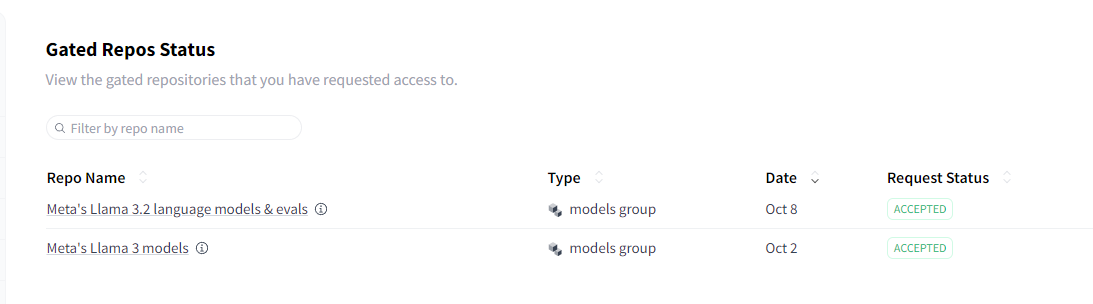

Make sure the request status shows that access has been granted.

Run the following command, then enter your Hugging Face access token when prompted.

```
!huggingface-cli login
```

Next, run the following code, replacing the content of `messages` with the prompt you want.

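```python
# Use a pipeline as a high-level helper
from transformers import pipeline

# Chat-style input: a list of messages, each with a role and content.
# Replace the content with the prompt you want to send.
messages = [
    {"role": "user", "content": "Do you know elon musk?"},
]

# Load Llama 3.2 1B Instruct for text generation; max_new_tokens limits the reply length.
pipe = pipeline("text-generation", model="meta-llama/Llama-3.2-1B-Instruct", max_new_tokens=50)

# Run the model on the conversation (Colab displays the returned value).
pipe(messages)
```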

Here is the result.

Output:

```
Hardware accelerator e.g. GPU is available in the environment, but no `device` argument is passed to the `Pipeline` object. Model will be on CPU.
Setting `pad_token_id` to `eos_token_id`:128001 for open-end generation.
```
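The first log line means the model ran on the CPU even though the Colab runtime has a GPU, because `pipeline` was not given a `device`. The second line is an informational message from text generation and does not indicate an error. Below is a minimal sketch of choosing the device explicitly, assuming the PyTorch install that Colab ships with; `pipeline_kwargs` and `CUDA_AVAILABLE` are hypothetical names used only for illustration, not part of transformers.

```python
from typing import Any, Dict

try:
    import torch  # preinstalled on Colab (assumption for other environments)
    CUDA_AVAILABLE = torch.cuda.is_available()
except ImportError:
    # Without torch we cannot use a GPU, so fall back to the CPU.
    CUDA_AVAILABLE = False


def pipeline_kwargs(cuda_available: bool, max_new_tokens: int = 50) -> Dict[str, Any]:
    """Hypothetical helper: keyword arguments for transformers.pipeline.

    device=0 places the model on the first GPU; device=-1 keeps it on the CPU.
    """
    return {
        "task": "text-generation",
        "model": "meta-llama/Llama-3.2-1B-Instruct",
        "device": 0 if cuda_available else -1,
        "max_new_tokens": max_new_tokens,
    }


kwargs = pipeline_kwargs(CUDA_AVAILABLE)
print(kwargs["device"])
```

The dictionary can then be unpacked into the pipeline, e.g. `pipe = pipeline(**kwargs)`, which should remove the CPU warning when a GPU is present.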

The pipeline returns the whole conversation, with the model's reply appended as an `assistant` message:

```
[{'generated_text': [{'role': 'user', 'content': 'Do you know elon musk?'},
   {'role': 'assistant',
    'content': 'Yes, I do know Elon Musk. He is a South African-born entrepreneur, inventor, and business magnate. Born on June 28, 1971, Musk is best known for his ambitious goals in revolutionizing various industries, including:\n\n1'}]}]
```
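The reply stops abruptly at `1` because `max_new_tokens=50` caps the answer at 50 new tokens; a larger value allows longer answers. Since the pipeline returns the full conversation, a small helper can pull out the assistant's text and reuse the history for a follow-up question. This is a minimal sketch assuming the output shape shown above; `extract_reply` and the follow-up prompt are hypothetical, and the sample reply is shortened.

```python
from typing import Any, Dict, List


def extract_reply(outputs: List[Dict[str, Any]]) -> str:
    """Hypothetical helper: return the last assistant message from a chat pipeline output.

    Assumes the shape [{'generated_text': [..., {'role': 'assistant', 'content': ...}]}].
    """
    conversation = outputs[0]["generated_text"]
    for message in reversed(conversation):
        if message["role"] == "assistant":
            return message["content"]
    raise ValueError("no assistant message in pipeline output")


# Sample output with the same structure as above (reply text shortened).
outputs = [{
    "generated_text": [
        {"role": "user", "content": "Do you know elon musk?"},
        {"role": "assistant", "content": "Yes, I do know Elon Musk."},
    ]
}]

reply = extract_reply(outputs)
print(reply)  # Yes, I do know Elon Musk.

# Continue the chat: reuse the returned history and append a new user turn
# (builds a new list; the original outputs are left unchanged).
follow_up = outputs[0]["generated_text"] + [
    {"role": "user", "content": "What companies does he run?"}
]
```

Calling `pipe(follow_up)` would then send the whole conversation back to the model so it can answer in context.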

All of the code is available on Google Colab.

Written by Gaudhiwaa Hendrasto